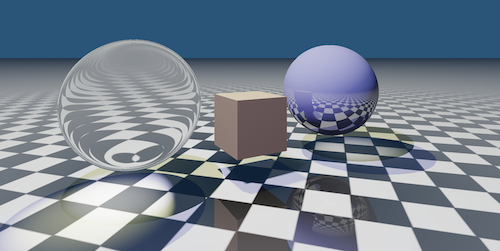

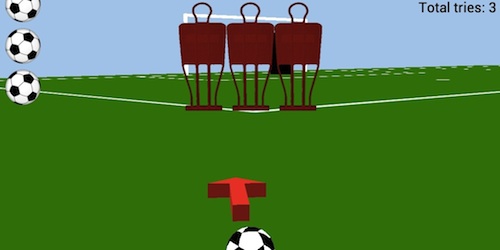

Set-Piece Specialist

Set-Piece Specialist is my first ever game, or at least the first I have made from scratch. You can click here and try it out, as long as you are using a WebGL-capable browser. It is a free kick simulator in which the player must try to score from increasingly difficult positions. They can control the power, direction and spin of the ball, all of which affect its flight realistically. I am developing it partly as a physics-based demo for my course, but I hope it will become more than that. It uses three.js for the graphics and cannon.js for the physics. To simulate the ‘bend’ of the ball, I had to extend cannon.js with my own solution, which simulates the Magnus effect: when a spinning ball is travelling fast enough, turbulence is created on the side which is spinning against the direction of movement, and the resulting pressure difference pushes the ball sideways. Taking the cross product of the ball’s angular and linear velocity gives the direction of this force, and its size grows with both spin and speed. I started developing the game only in the past week, in between other things, so it is pre-alpha at this point. I will update this post as I continue to refine the gameplay and add features.
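The cross-product step can be sketched as follows. This is a minimal Python illustration, not the game’s actual JavaScript: it assumes the force takes the common form F = S(ω × v) with a single tuning coefficient S, and the `coefficient` and `min_speed` values are hypothetical. With y pointing up and the ball travelling along +x, spin about +z is backspin, so the force points upwards.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Return the cross product a x b."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def magnus_force(angular_velocity: Vec3, velocity: Vec3,
                 coefficient: float = 0.0005,
                 min_speed: float = 1.0) -> Vec3:
    """Approximate the sideways (Magnus) force on a spinning ball.

    Assumed model: F = coefficient * (angular_velocity x velocity).
    coefficient and min_speed are hypothetical tuning values; below
    min_speed no force is applied.
    """
    speed = sum(c * c for c in velocity) ** 0.5
    if speed < min_speed:
        return (0.0, 0.0, 0.0)
    fx, fy, fz = cross(angular_velocity, velocity)
    return (coefficient * fx, coefficient * fy, coefficient * fz)


# Backspin on a ball moving along +x gives an upward (+y) force.
print(magnus_force((0.0, 0.0, 10.0), (20.0, 0.0, 0.0)))
```

In a physics engine the result would be applied to the ball’s body once per step (cannon.js bodies expose an `applyForce` method), so the curve emerges from integrating the force over the whole flight rather than from a scripted path.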

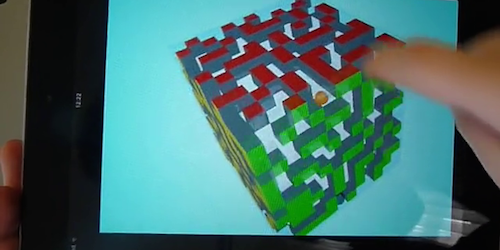

AMazeThing OSX + Kinect

This was a continuation of a previous group project, the details of which can be found here. Our course required us to produce an interactive application that used some aspect of computer vision (the acquiring and processing of image data), so we decided to port our original application to 3D motion controls using a Kinect. You can watch a live demo below. My role was to research potential methods for Kinect integration, and I was also primarily responsible for adding these controls to a ‘snapshot’ of the iOS version while development continued apace on the full version. Roles were fluid, though, and we often found ourselves all working on one version or the other when an issue arose. We implemented the motion controls with the ofxOpenNI addon, which made it easy to add skeleton tracking and to use the co-ordinates of each joint of the skeleton as input. On top of this we designed a gesture-based control system to create an intuitive experience. We have continued to develop the iOS app in parallel, and hope to release it on the App Store soon.
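To illustrate how skeleton joint co-ordinates can drive a tilting maze, here is a hypothetical mapping in Python. It is not the scheme we actually shipped: the ‘hold a tray’ gesture, the assumption that joint positions arrive in millimetres with z as distance from the sensor, and the `neutral_mm`, `reach_mm` and `MAX_TILT_DEG` values are all illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z), assumed to be in millimetres

MAX_TILT_DEG = 30.0  # hypothetical limit on how far the maze can tilt


def clamp(value: float, lo: float, hi: float) -> float:
    """Restrict value to the range [lo, hi]."""
    return max(lo, min(hi, value))


def hands_to_tilt(left_hand: Vec3, right_hand: Vec3, torso: Vec3,
                  neutral_mm: float = 250.0,
                  reach_mm: float = 400.0) -> Tuple[float, float]:
    """Map tracked joint positions to (roll, pitch) tilt angles in degrees.

    Roll comes from the height difference between the hands, as if the
    player were tilting a tray. Pitch comes from how far the midpoint of
    the hands is pushed towards the sensor (smaller z) beyond a neutral
    distance in front of the torso.
    """
    dx = abs(right_hand[0] - left_hand[0])
    dy = right_hand[1] - left_hand[1]
    roll = math.degrees(math.atan2(dy, dx))

    mid_z = (left_hand[2] + right_hand[2]) / 2.0
    push = (torso[2] - mid_z - neutral_mm) / reach_mm
    pitch = push * MAX_TILT_DEG

    return (clamp(roll, -MAX_TILT_DEG, MAX_TILT_DEG),
            clamp(pitch, -MAX_TILT_DEG, MAX_TILT_DEG))


# Level hands held at the neutral distance: no tilt.
print(hands_to_tilt((-300.0, 0.0, 1750.0), (300.0, 0.0, 1750.0), (0.0, 0.0, 2000.0)))
```

Measuring the hands relative to the torso, rather than in absolute sensor space, means the player can stand anywhere in the Kinect’s field of view without the board drifting.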

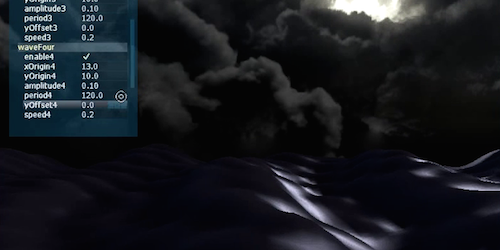

Realtime Waves in DirectX9

This was the third deliverable for the Maths and Graphics portion of my course. We were asked to produce a realtime representation of ocean waves, depicting their swells and ripples in 3D, using the RTVS_Lite framework. My program models the waves as a 128 × 128 plane and lets the user adjust its appearance; you can see it in action above. Every frame, the program displaces the y position of each vertex and updates the normals accordingly, so that the lighting reacts to the changes. The open-source library AntTweakBar lets the user easily adjust the appearance of the ocean surface. Up to six different waves can be added, each with adjustable amplitude, wave period and origin. A skybox enhances the realism, and the user can switch between three backgrounds at will.
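The per-vertex update can be sketched as a sum of circular sine waves, each spreading from its own origin, with normals taken from the analytic slope of the surface. This Python sketch is an illustration rather than the original DirectX9 code: it assumes each wave’s wavelength follows from its period via the deep-water dispersion relation ω² = gk, since the program only exposes amplitude, period and origin.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

GRAVITY = 9.81
MAX_WAVES = 6


@dataclass
class Wave:
    amplitude: float
    period: float                 # seconds
    origin: Tuple[float, float]   # (x, z) point the circular wave spreads from

    @property
    def angular_frequency(self) -> float:
        return 2.0 * math.pi / self.period

    @property
    def wavenumber(self) -> float:
        # Assumed deep-water dispersion relation: omega^2 = g * k
        return self.angular_frequency ** 2 / GRAVITY


def height_and_normal(x: float, z: float, t: float, waves: List[Wave]):
    """Return (height, unit normal) of the summed surface at (x, z), time t."""
    h = dhdx = dhdz = 0.0
    for w in waves:
        rx, rz = x - w.origin[0], z - w.origin[1]
        d = math.hypot(rx, rz)
        phase = w.wavenumber * d - w.angular_frequency * t
        h += w.amplitude * math.sin(phase)
        if d > 1e-9:  # the slope is undefined exactly at the origin
            slope = w.amplitude * w.wavenumber * math.cos(phase) / d
            dhdx += slope * rx
            dhdz += slope * rz
    # For a height field y = h(x, z), the normal is (-dh/dx, 1, -dh/dz).
    nx, ny, nz = -dhdx, 1.0, -dhdz
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return h, (nx / length, ny / length, nz / length)


def update_grid(size: int, spacing: float, t: float, waves: List[Wave]):
    """Recompute every vertex height and normal for one frame."""
    if len(waves) > MAX_WAVES:
        raise ValueError("at most %d waves are supported" % MAX_WAVES)
    heights, normals = [], []
    for i in range(size):
        row_h, row_n = [], []
        for j in range(size):
            h, n = height_and_normal(i * spacing, j * spacing, t, waves)
            row_h.append(h)
            row_n.append(n)
        heights.append(row_h)
        normals.append(row_n)
    return heights, normals


heights, normals = update_grid(128, 0.5, 0.0, [Wave(0.5, 4.0, (32.0, 32.0))])
```

Computing the normals analytically avoids a second pass over neighbouring vertices; a finite-difference version over the displaced grid would work equally well.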

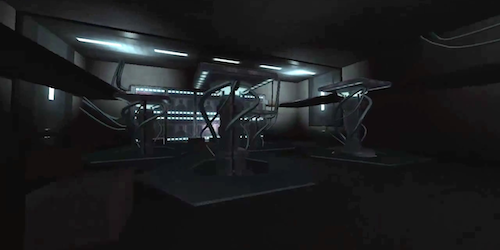

GoldEngine Game Demo

The above video is a recording of a prototype level made in Unity by the students currently enrolled on the MSc Computer Games and Entertainment at Goldsmiths University. We are working on porting this level to our own engine, and then will attempt to build as close to a full game as possible within the time constraints of the course. I am responsible for the sound design within the demo, and will also be recording and scripting sounds for the full game, as well as performing more general gameplay design and technical roles. This post will be updated as progress continues on the game, and more working examples will be shown.

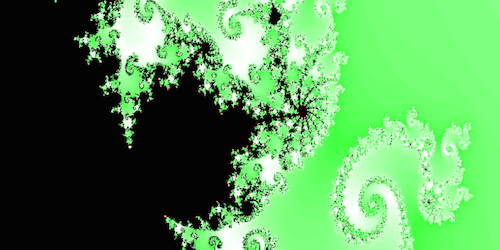

Fractal Viewer

This is a web app, and you are on the web, so you should really click here and try it. As long as you’re not on a mobile device or using Internet Explorer, it should just work! Actually, if you have a more recent Android device that can run Chrome (anything with an ARMv7 processor running Android 4.0+), it should work with a flag enabled. I would like to hear from you if you try this! Are you back? Then I will tell you about the app, how I made it, and a bit about my feelings concerning WebGL. Most of the UI is implemented using JavaScript and jQuery; despite having next to no experience with either, I found them very easy to hit the ground running with, and the application was largely built in a single evening. The UI passes the required values into JavaScript functions I wrote, which then pass the processed data as uniforms to the fragment shader. The application is therefore a mixture of JavaScript and GLSL. This combines quick, flexible development for the UI with very high performance for the repetitive and costly calculations needed to respond to user interaction, since these run on the GPU in optimised shader code. So not only does it have a relatively intuitive UI which I was able to build quickly, but it can also respond to user input in realtime and generate high-quality images. The project is named ‘Fractal Viewer’ rather than ‘Mandelbrot Viewer’ because I hope to extend it to display other fractals in the future. Apart from the shader, the framework could be applied to represent any mathematical set. I also think the colourisation and mouse controls could be improved. I’ll make further posts when I have time to work on it.
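The work the fragment shader does for each pixel is the standard escape-time iteration z → z² + c. Below is a CPU-side Python sketch of that per-pixel logic, for illustration only: `center` and `scale` stand in for hypothetical uniforms passed in from the JavaScript UI, and `render` loops over pixels serially where the GPU would evaluate them in parallel.

```python
from typing import List, Tuple


def escape_time(cx: float, cy: float, max_iter: int = 100) -> int:
    """Iterations of z -> z^2 + c before |z| > 2 (max_iter if it never escapes)."""
    zx, zy = 0.0, 0.0
    for i in range(max_iter):
        zx, zy = zx * zx - zy * zy + cx, 2.0 * zx * zy + cy
        if zx * zx + zy * zy > 4.0:
            return i
    return max_iter


def pixel_to_complex(px: float, py: float, width: int, height: int,
                     center: Tuple[float, float], scale: float) -> Tuple[float, float]:
    """Map a pixel to the complex plane.

    center (re, im) and scale (width of the view in complex units) play the
    role of hypothetical shader uniforms. Screen y grows downwards, so it is
    flipped to make the imaginary axis point up.
    """
    re = center[0] + (px - width / 2) * scale / width
    im = center[1] - (py - height / 2) * scale / width
    return re, im


def render(width: int, height: int, center=(-0.5, 0.0), scale=3.0,
           max_iter: int = 100) -> List[List[int]]:
    """Return a row-major grid of iteration counts, one per pixel."""
    return [[escape_time(*pixel_to_complex(px, py, width, height, center, scale), max_iter)
             for px in range(width)]
            for py in range(height)]


counts = render(80, 60)
```

Because each pixel depends only on its own coordinates and the uniforms, the computation is embarrassingly parallel, which is why moving it into a fragment shader pays off so well.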

AMazeThing iOS

This was a group project in which Alberto Toglia, Lorenzo Mori, Harry Evans, Will Masek and I collaborated to create an iOS app. We were asked to produce a “creative” mobile application using openFrameworks, and chose to make this ball maze game (with a particularly clever name). openFrameworks is a cross-platform library designed to make it easier to produce portable code, and it has found widespread use in contemporary digital art. I scripted the collision and rolling sounds, which meant implementing a function that could respond to collision callbacks. This involved modifying the add-on library we were using (ofxBullet, which unsurprisingly is the Bullet physics library repurposed to hook into OF), because its standard functions weren’t giving us the data we needed: the lifetime of each collision. I also helped design and implement the user interaction, for example the pinch-to-zoom function. We hope to get it onto the App Store at some point, and are looking into porting it to Android. We’re also going to collaborate further to bring the application to desktop platforms, possibly with Kinect support.
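The collision lifetime matters because a contact that persists across frames would otherwise retrigger the impact sound every frame. The idea can be sketched in Python as below; this is not the modified ofxBullet code. The `Contact` record is hypothetical (Bullet’s `btManifoldPoint` keeps a comparable per-point counter, `m_lifeTime`), and the threshold and volume-scaling constants are illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple

IMPACT_THRESHOLD = 0.05  # hypothetical: ignore impulses below this
MAX_IMPULSE = 2.0        # hypothetical: impulse that maps to full volume
MAX_ROLL_SPEED = 4.0     # hypothetical: ball speed that maps to full rolling volume


@dataclass
class Contact:
    lifetime: int   # frames this contact has already existed (0 = new this frame)
    impulse: float  # impulse applied at the contact point
    speed: float    # ball speed at the time of the contact


def sound_events(contacts: List[Contact]) -> List[Tuple[str, float]]:
    """Turn this frame's contacts into (sound, volume) events."""
    events = []
    for c in contacts:
        if c.lifetime == 0:
            # A brand-new contact is an impact: play a one-shot sound once.
            if c.impulse > IMPACT_THRESHOLD:
                events.append(("impact", min(c.impulse / MAX_IMPULSE, 1.0)))
        else:
            # A contact that persists across frames means the ball is rolling.
            events.append(("roll", min(c.speed / MAX_ROLL_SPEED, 1.0)))
    return events


print(sound_events([Contact(0, 1.0, 3.0), Contact(5, 0.2, 2.0)]))
```

Scaling the impact volume by the impulse keeps light taps quiet, while the rolling volume tracks the ball’s speed for as long as the contact survives.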

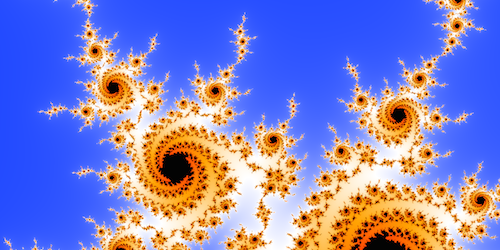

Mandelbrot Viewer

This was a group project in which Harry Evans and I produced a viewer for the Mandelbrot set. This was once again done using the RTVS_Lite framework, though this time we worked more directly with DirectX9, writing the image to a texture and then drawing the texture onto a quad. In retrospect this was quite a bad idea. The app’s performance is poor, and would have been much better if we had drawn the image using a fragment shader. I have since used that approach to port the viewer to a web app. However, the application does have some nice features, such as the ability to read configuration files that specify colour schemes, and the ability to ‘drag-to-zoom’. You can download the demo and source code here. DirectX9 is required.
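Drag-to-zoom boils down to converting the dragged screen rectangle into new bounds on the complex plane. The following Python sketch shows one way to do it, not the original DirectX9 source: the `View` type and the choice to widen the shorter side of the selection so that the image is not stretched are assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class View:
    re_min: float
    re_max: float
    im_min: float
    im_max: float


def screen_to_complex(px: float, py: float, view: View,
                      width: int, height: int) -> Tuple[float, float]:
    """Map a screen pixel (y grows downwards) to a point in the current view."""
    re = view.re_min + (px / width) * (view.re_max - view.re_min)
    im = view.im_max - (py / height) * (view.im_max - view.im_min)
    return re, im


def drag_to_zoom(view: View, start: Tuple[float, float], end: Tuple[float, float],
                 width: int, height: int) -> View:
    """Return the view selected by dragging from start to end (in pixels)."""
    x0, x1 = sorted((start[0], end[0]))
    y0, y1 = sorted((start[1], end[1]))
    if x1 - x0 < 2 or y1 - y0 < 2:
        return view  # treat tiny drags as clicks and keep the current view
    re_a, im_a = screen_to_complex(x0, y0, view, width, height)  # top-left
    re_b, im_b = screen_to_complex(x1, y1, view, width, height)  # bottom-right
    re_span, im_span = re_b - re_a, im_a - im_b
    # Widen the shorter side so the new view keeps the window's aspect ratio.
    aspect = width / height
    if re_span / im_span < aspect:
        re_span = im_span * aspect
    else:
        im_span = re_span / aspect
    re_c, im_c = (re_a + re_b) / 2, (im_a + im_b) / 2
    return View(re_c - re_span / 2, re_c + re_span / 2,
                im_c - im_span / 2, im_c + im_span / 2)


start_view = View(-2.0, 1.0, -1.5, 1.5)
print(drag_to_zoom(start_view, (0, 0), (150, 150), 300, 300))
```

Sorting the corner co-ordinates first means the user can drag in any direction and still get the same selection.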

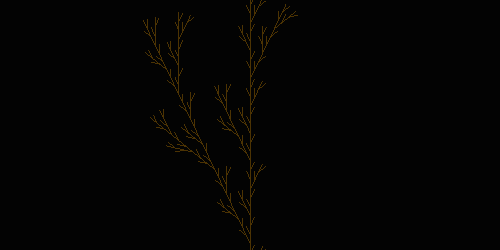

L-System Viewer

This was the first piece of coursework I submitted for the ‘Maths and Graphics’ portion of my MSc. We were asked to produce a program which could read a set of rules from a local file and produce a graphical representation of the corresponding Lindenmayer system. Known as L-systems for short, these are rewriting systems which use simple, recursively applied rules to represent complex natural phenomena. Using the RTVS_Lite framework as a starting point, I implemented a system that writes a ‘tree’ to a null-terminated string by iteratively applying a rule (or rules) read from a file. I then added a parser so that the program could read this string and produce a series of graphical primitives (lines) to represent the tree. Some display parameters, such as branch angle and zoom level, can be adjusted by the user in realtime, and the user can also switch between configuration files to view different sample trees. You can download the demo and source code here. DirectX9 is required.
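The two stages, rewriting the string and then parsing it into lines, can be sketched as follows. This Python version is an illustration, not the coursework’s C++: it uses ordinary Python strings instead of a null-terminated buffer, assumes a hypothetical `symbol=replacement` rule-file format, and uses the conventional turtle symbols (F draws forward, + and - turn by the branch angle, [ and ] save and restore the turtle state).

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def parse_rules(text: str) -> Dict[str, str]:
    """Parse hypothetical 'symbol=replacement' lines; '#' starts a comment line."""
    rules = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        lhs, rhs = line.split("=", 1)
        rules[lhs.strip()] = rhs.strip()
    return rules


def rewrite(axiom: str, rules: Dict[str, str], iterations: int) -> str:
    """Apply the rules to every symbol in parallel, `iterations` times."""
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


def interpret(commands: str, angle_deg: float, step: float = 1.0) -> List[Segment]:
    """Turtle interpretation of the rewritten string into line segments."""
    x, y, heading = 0.0, 0.0, 90.0  # start at the origin, pointing up
    stack: List[Tuple[float, float, float]] = []
    segments: List[Segment] = []
    for ch in commands:
        if ch == "F":
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":
            heading += angle_deg
        elif ch == "-":
            heading -= angle_deg
        elif ch == "[":
            stack.append((x, y, heading))  # start a branch
        elif ch == "]":
            x, y, heading = stack.pop()    # return to the branch point
        # Any other symbol (e.g. X) only drives rewriting and draws nothing.
    return segments


rules = parse_rules("# simple tree\nF=F[+F]F[-F]F\n")
lines = interpret(rewrite("F", rules, 3), angle_deg=25.7)
```

Because the branch angle is only used during interpretation, it can be changed in realtime without re-running the rewriting step; the same holds for zoom, which only scales the resulting segments.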